Espressif’s New Voice Assistant, ESP-Skainet, Released

Shanghai, China

Aug 30, 2019

With ESP-Skainet, users can easily build applications that perform wake-word detection and process speech-recognition commands.

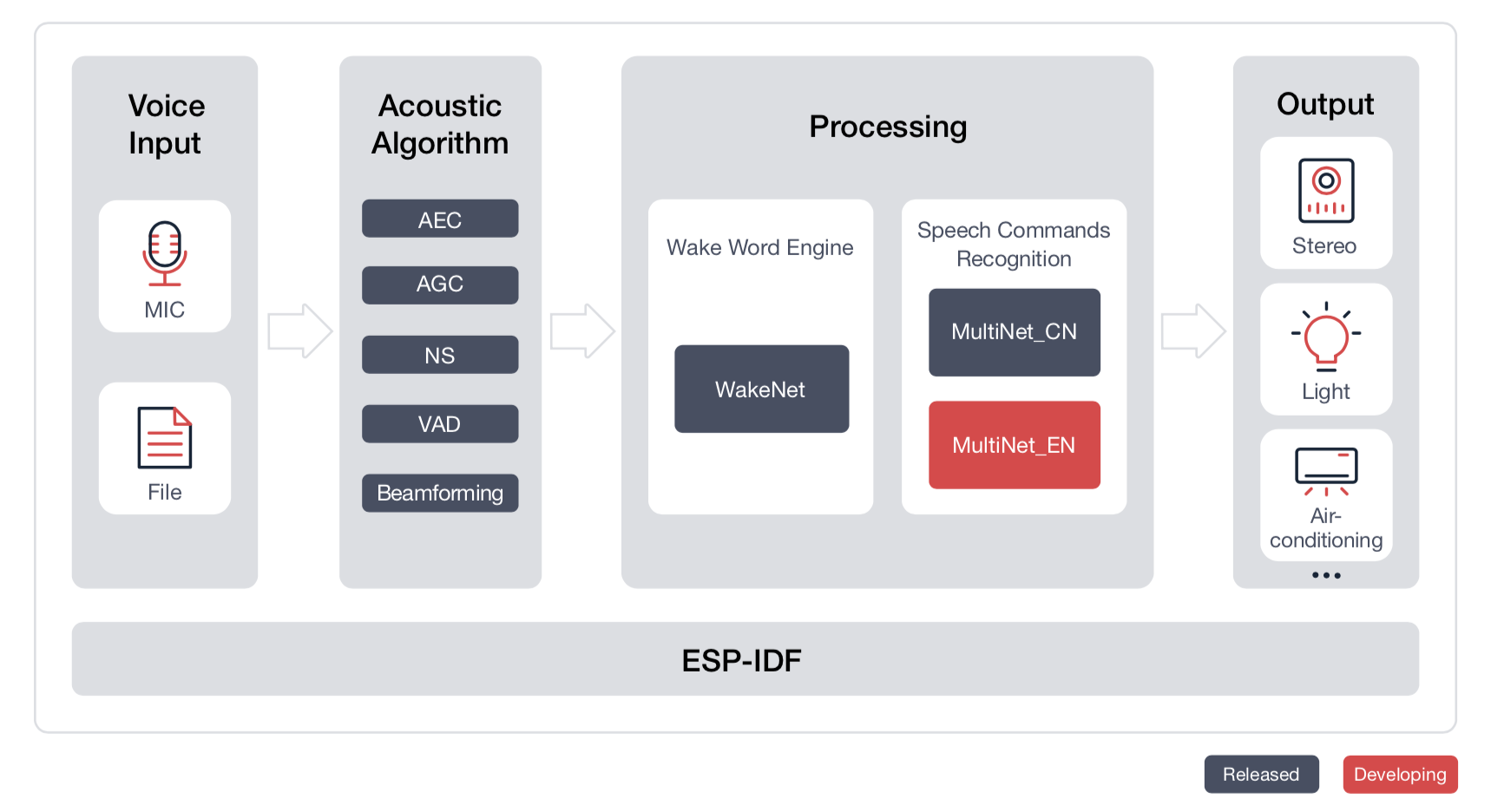

ESP-Skainet is a new voice-interaction development framework based on Espressif’s flagship chip, ESP32. Built on Espressif’s WakeNet and MultiNet, it supports voice wake-up and multiple offline speech-recognition commands.

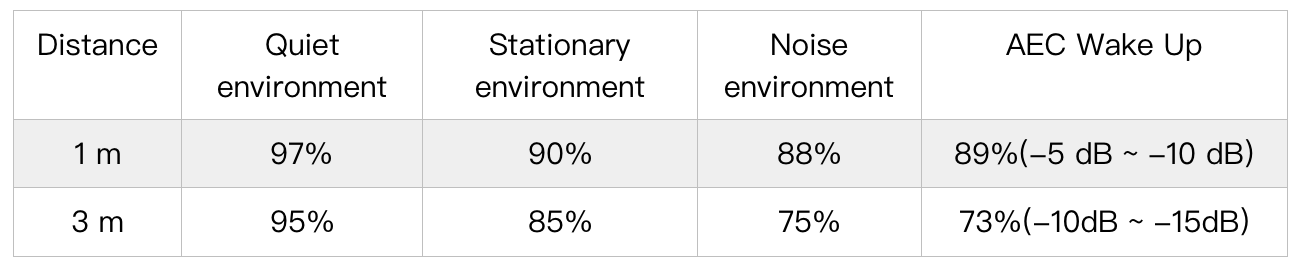

WakeNet is Espressif’s voice wake-up engine. It uses approximately 20 KB of memory and achieves a high calculation speed. WakeNet has been specifically designed for low-power embedded MCUs, providing users with excellent near- and far-field performance. Taking LyraT-Mini as an example of a small yet versatile and powerful development board, the currently achievable wake-up performance in a quiet environment is 97% when the user is within one meter of the board, and 95% when the user is within three meters, as shown in the table below.

Currently, Espressif’s voice wake-up engine supports up to five wake-up words. Espressif provides its customers with the official wake-up word “嗨乐鑫” for free. It translates into English as “Hello Espressif”, is pronounced “haɪ ləʌˈʃɪən”, and is transcribed in pinyin as “Hi Lexin”. Espressif also supports custom wake-up words for customers. For details of the customization process, please refer to “ESP_Wake_Words_Customization”.

MultiNet is a lightweight model that allows ESP32 to perform offline speech recognition of multiple commands. Its design draws on Convolutional Recurrent Neural Networks (CRNN) and Connectionist Temporal Classification (CTC). MultiNet takes the Mel-Frequency Cepstral Coefficients (MFCC) of an audio clip as input and outputs the phonemes of that clip, which can be in either Chinese or English. By comparing the output phonemes with those of the supported commands, MultiNet can identify the relevant Chinese or English command. For the time being, up to 100 spoken commands in Chinese, including customized ones, are supported.
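The phoneme-comparison step described above can be illustrated with a small sketch. This is not MultiNet's actual code: it assumes the model emits one label per audio frame, applies the standard greedy CTC collapse (merge repeated labels, drop blanks), and then picks the closest command by edit distance. The blank symbol, the pinyin syllables, the command IDs, and the `max_distance` threshold are all hypothetical choices made for illustration.

```python
from typing import Dict, List, Optional, Sequence

BLANK = "_"  # hypothetical CTC blank symbol


def ctc_greedy_collapse(frames: Sequence[str], blank: str = BLANK) -> List[str]:
    """Merge consecutive repeated labels, then drop blank labels."""
    out: List[str] = []
    prev = None
    for label in frames:
        if label != prev and label != blank:
            out.append(label)
        prev = label
    return out


def edit_distance(a: Sequence[str], b: Sequence[str]) -> int:
    """Levenshtein distance between two phoneme sequences."""
    prev_row = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        row = [i]
        for j, y in enumerate(b, start=1):
            row.append(min(prev_row[j] + 1,              # deletion
                           row[j - 1] + 1,               # insertion
                           prev_row[j - 1] + (x != y)))  # substitution
        prev_row = row
    return prev_row[-1]


def match_command(phonemes: Sequence[str],
                  commands: Dict[int, List[str]],
                  max_distance: int = 1) -> Optional[int]:
    """Return the ID of the closest command, or None if nothing is close enough."""
    best_id, best_dist = None, max_distance + 1
    for cmd_id, ref in commands.items():
        dist = edit_distance(phonemes, ref)
        if dist < best_dist:
            best_id, best_dist = cmd_id, dist
    return best_id


# Hypothetical command table: ID -> pinyin syllables.
COMMANDS = {0: ["da", "kai", "deng"], 1: ["guan", "bi", "deng"]}

# Hypothetical per-frame output for one utterance.
frames = ["_", "da", "da", "_", "kai", "kai", "_", "_", "deng"]
decoded = ctc_greedy_collapse(frames)
matched = match_command(decoded, COMMANDS)
```

A tolerance of one edit lets a single misrecognized syllable still resolve to a command, while an unrelated utterance is rejected; the right threshold for a real system would have to be tuned.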

What’s more, users can easily add their own voice commands without having to retrain the model. Because no network connection is required, commands can be implemented quickly while user information remains secure. Commands in English will be supported in the next edition of MultiNet.
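One way to picture why adding a command needs no retraining: if the model only produces phonemes, the command set can live in a plain lookup table, and a new command is just a new entry. The sketch below is an assumption-laden illustration, not the ESP-Skainet API. The `CommandTable` class, its method names, and the pinyin strings are hypothetical; only the limit of 100 commands comes from the article.

```python
from typing import Dict, Optional, Tuple

MAX_COMMANDS = 100  # limit quoted in the article


class CommandTable:
    """Hypothetical table mapping command IDs to phoneme sequences."""

    def __init__(self) -> None:
        self._commands: Dict[int, Tuple[str, ...]] = {}

    def add(self, command_id: int, phonemes: str) -> None:
        """Register or overwrite a command; the model itself is untouched."""
        is_new = command_id not in self._commands
        if is_new and len(self._commands) >= MAX_COMMANDS:
            raise ValueError(f"at most {MAX_COMMANDS} commands are supported")
        self._commands[command_id] = tuple(phonemes.split())

    def lookup(self, phonemes: str) -> Optional[int]:
        """Return the ID whose phoneme sequence matches exactly, else None."""
        key = tuple(phonemes.split())
        for command_id, ref in self._commands.items():
            if ref == key:
                return command_id
        return None

    def __len__(self) -> int:
        return len(self._commands)


table = CommandTable()
table.add(0, "da kai deng")    # hypothetical built-in command
table.add(1, "guan bi deng")   # hypothetical built-in command
table.add(2, "bo fang yin yue")  # user-defined command added at run time
```

Since the table is ordinary data held on the device, this kind of update also involves no network round trip.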
